QnA Maker can automatically create a Bot on Azure that links to your knowledge base. Recently, I have been asked many times why the QnA Bot created from the template does not display follow-up prompts. Follow-up prompts are only a PREVIEW feature of QnA Maker, and the QnA Bot template does not support them yet. Until Microsoft Bot Framework officially supports follow-up prompts, we have to change the code of our QnA Bot to support them. In this article, I will show you how to implement follow-up prompts by changing the Bot code.

Prerequisites

If you have already reproduced the issue of follow-up prompts not being displayed locally in Visual Studio and Bot Framework Emulator, you can skip this Prerequisites section.

Create a knowledge base on https://www.qnamaker.ai/.

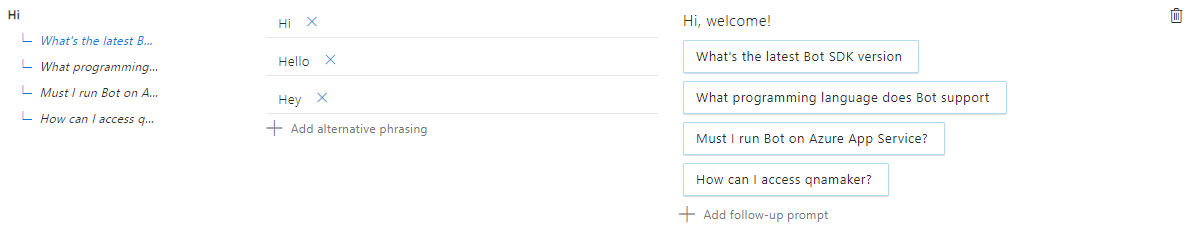

Ensure you have added at least one follow-up prompt:

Save and train, then test the knowledge base in QnA Maker to check whether the follow-up prompts appear.

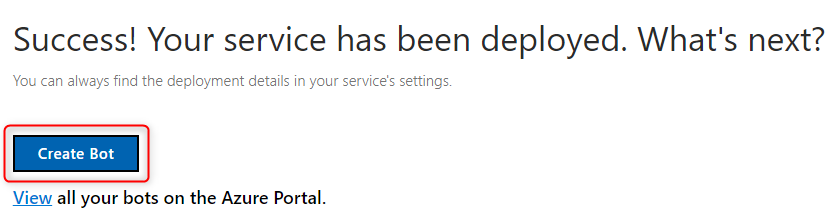

Publish the knowledge base and create a QnA Bot on Azure.

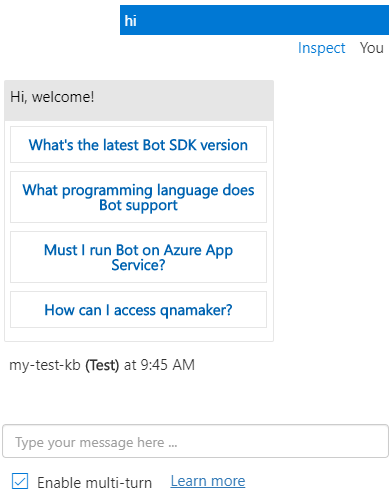

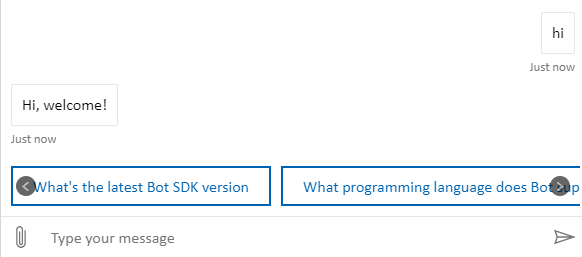

Test the newly created QnA Bot in Web Chat, and you will find that it does not display the follow-up prompts.

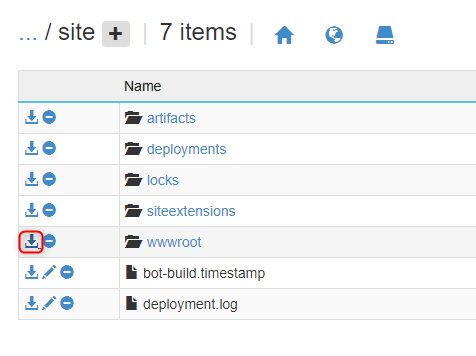

Now let's download the `wwwroot` project folder of the QnA Bot. You can download it through FTP, Git or Kudu Services. Assuming your Bot App Service URL is `https://qna-followup-test.azurewebsites.net/`, the Kudu Services entry URL would be `https://qna-followup-test.scm.azurewebsites.net/` (just add `.scm` before `.azurewebsites`). Go to Debug console -> CMD, then `cd` to the `site` folder, where you can click the download button to download the `wwwroot` folder as a zip file.
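The Kudu URL rule above is mechanical, so here is a minimal JavaScript sketch of it. The helper name `toKuduUrl` is hypothetical, and the sketch assumes the App Service uses its default `*.azurewebsites.net` host name rather than a custom domain.

```javascript
// Hypothetical helper: derive the Kudu (SCM) URL from a default App Service URL
// by inserting ".scm" before ".azurewebsites.net".
function toKuduUrl(appServiceUrl) {
  const url = new URL(appServiceUrl);
  const suffix = '.azurewebsites.net';
  if (!url.hostname.endsWith(suffix)) {
    // Custom domains do not follow this naming rule.
    throw new Error(`Not a default *.azurewebsites.net host: ${url.hostname}`);
  }
  // "qna-followup-test.azurewebsites.net" -> "qna-followup-test.scm.azurewebsites.net"
  url.hostname = url.hostname.slice(0, -suffix.length) + '.scm' + suffix;
  return url.toString();
}

console.log(toKuduUrl('https://qna-followup-test.azurewebsites.net/'));
// prints "https://qna-followup-test.scm.azurewebsites.net/"
```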

In

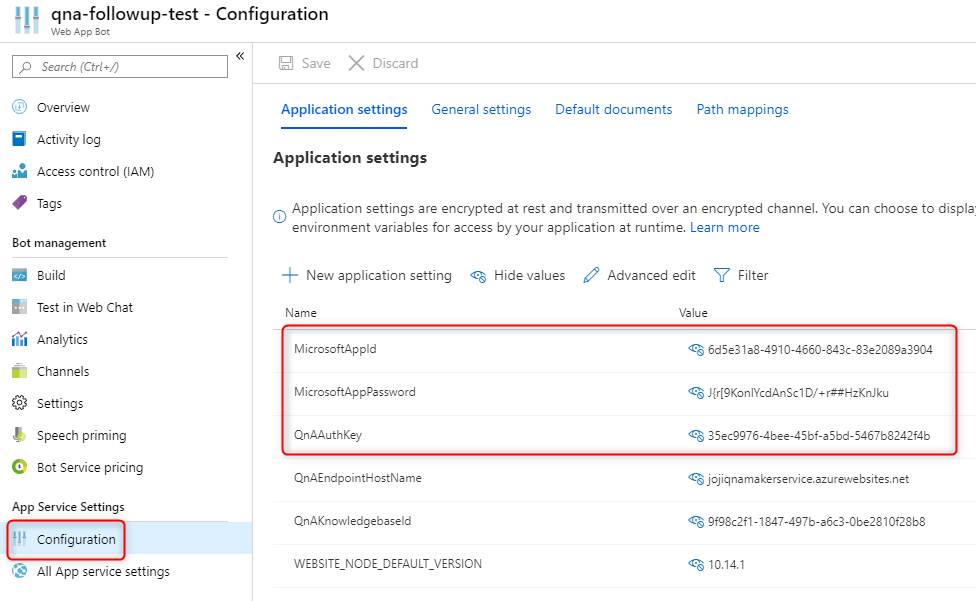

wwwrootfolder, openQnABot.slnin Visual Studio. Editappsettings.json, fill in theQnAKnowledgebaseId,QnAAuthKeyandQnAEndpointHostName. You can find these values fromQnA Bot->Configurationpage.

appsettings.json:

{ "MicrosoftAppId": "", "MicrosoftAppPassword": "", "QnAKnowledgebaseId": "9f98c2f1-1847-497b-a6c3-0be2810f28b8", "QnAAuthKey": "35ec9976-4bee-45bf-a5bd-5467b8242f4b", "QnAEndpointHostName": "jojiqnamakerservice.azurewebsites.net" }Press F5 to run and debug the QnA Bot locally on your Visual Studio. Use

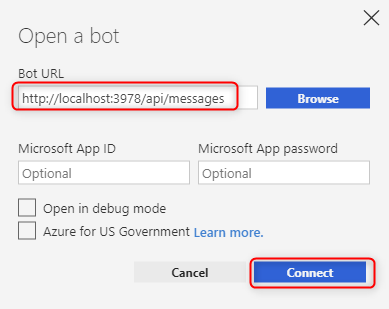

Bot Framework Emulatorto connect to your local Bot and test it. ForBot Framework Emulatorusage, you can find it from this tutorial.Connect

Bot Framework Emulatorto your local Bot URL:

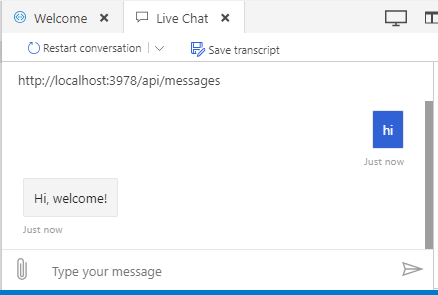

Now we reproduced the follow-up prompts not being displayed issue locally in

Bot Framework Emulator:

Theory

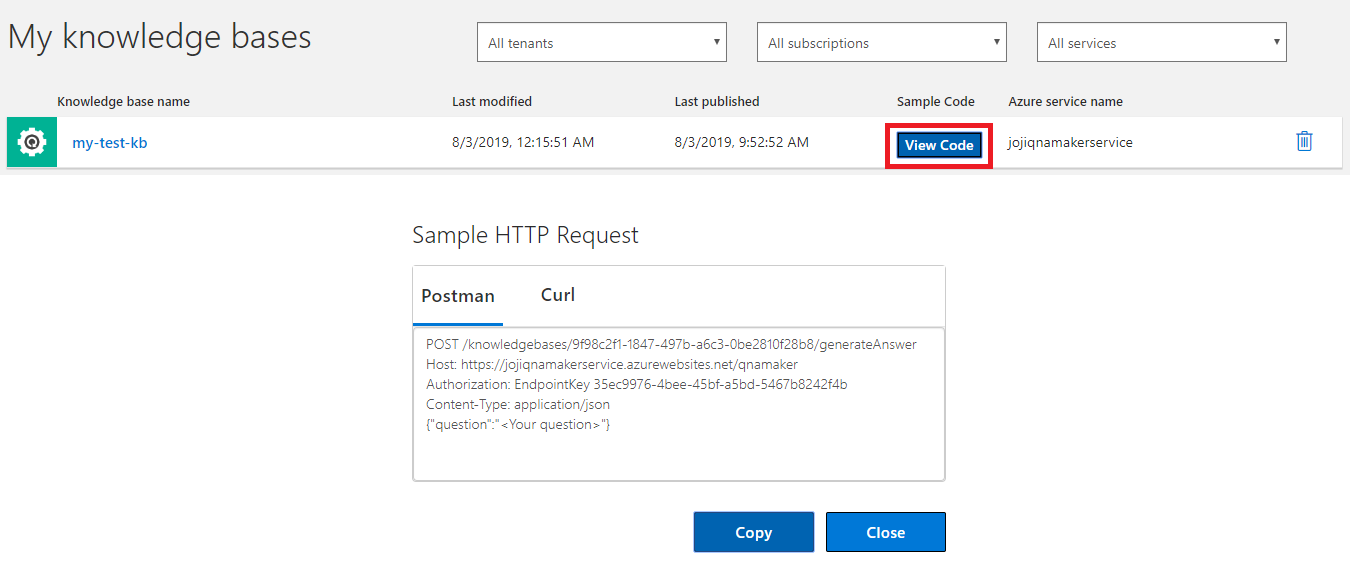

Go to your knowledge base and click the View Code button; you will find the HTTP API for querying a question.

qnamaker.ai suggests using Postman or curl to test the API. I recommend REST Client instead, a powerful HTTP client extension for Visual Studio Code.

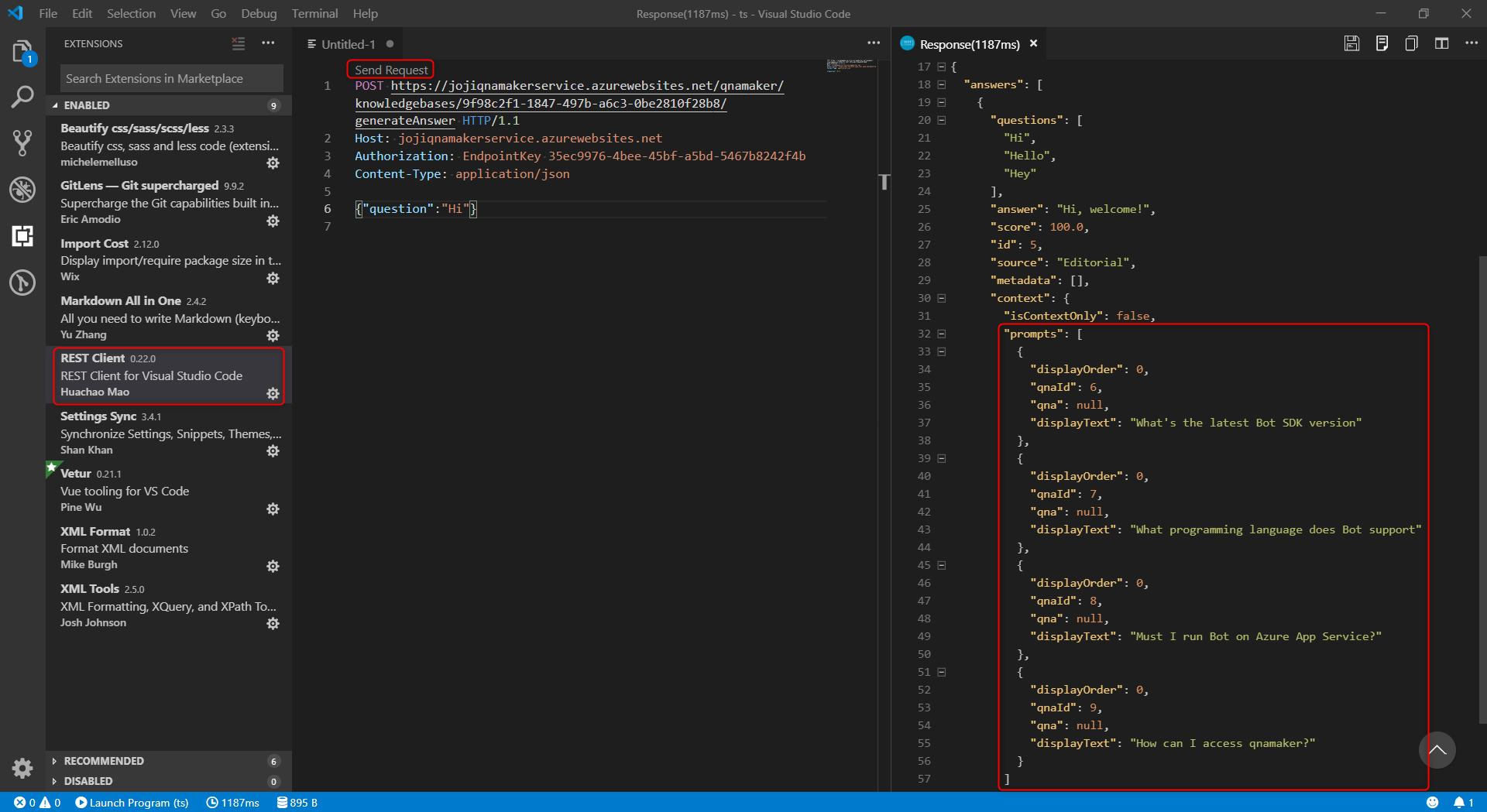
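For reference, here is a small JavaScript sketch of the request that the View Code panel describes. The helper names `buildGenerateAnswerRequest` and `generateAnswer` are hypothetical; the `/qnamaker/knowledgebases/{id}/generateAnswer` route and the `EndpointKey` authorization scheme are assumed to match what View Code shows for your knowledge base, so check them there. Sending the request assumes the global `fetch` of Node.js 18 or later.

```javascript
// Hypothetical helper: describe a generateAnswer call for one question.
// hostName is assumed to look like QnAEndpointHostName in appsettings.json,
// e.g. "jojiqnamakerservice.azurewebsites.net".
function buildGenerateAnswerRequest(hostName, knowledgeBaseId, endpointKey, question) {
  let host = hostName.startsWith('https://') ? hostName : `https://${hostName}`;
  if (!host.endsWith('/qnamaker')) {
    host += '/qnamaker'; // assumed runtime prefix, as shown by View Code
  }
  return {
    url: `${host}/knowledgebases/${knowledgeBaseId}/generateAnswer`,
    method: 'POST',
    headers: {
      Authorization: `EndpointKey ${endpointKey}`,
      'Content-Type': 'application/json'
    },
    // JSON.stringify escapes quotes and backslashes in the user's question.
    body: JSON.stringify({ question })
  };
}

// Send the request with the global fetch (Node.js 18+) and return the parsed JSON.
async function generateAnswer(hostName, knowledgeBaseId, endpointKey, question) {
  const req = buildGenerateAnswerRequest(hostName, knowledgeBaseId, endpointKey, question);
  const res = await fetch(req.url, { method: req.method, headers: req.headers, body: req.body });
  if (!res.ok) {
    throw new Error(`generateAnswer failed with HTTP ${res.status}`);
  }
  return res.json();
}
```

Keeping request construction separate from sending makes it easy to compare the URL, headers and body against what REST Client or View Code shows.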

As you can see below, the follow-up prompts are in fact already included in the JSON response, under `answers[0].context.prompts`.

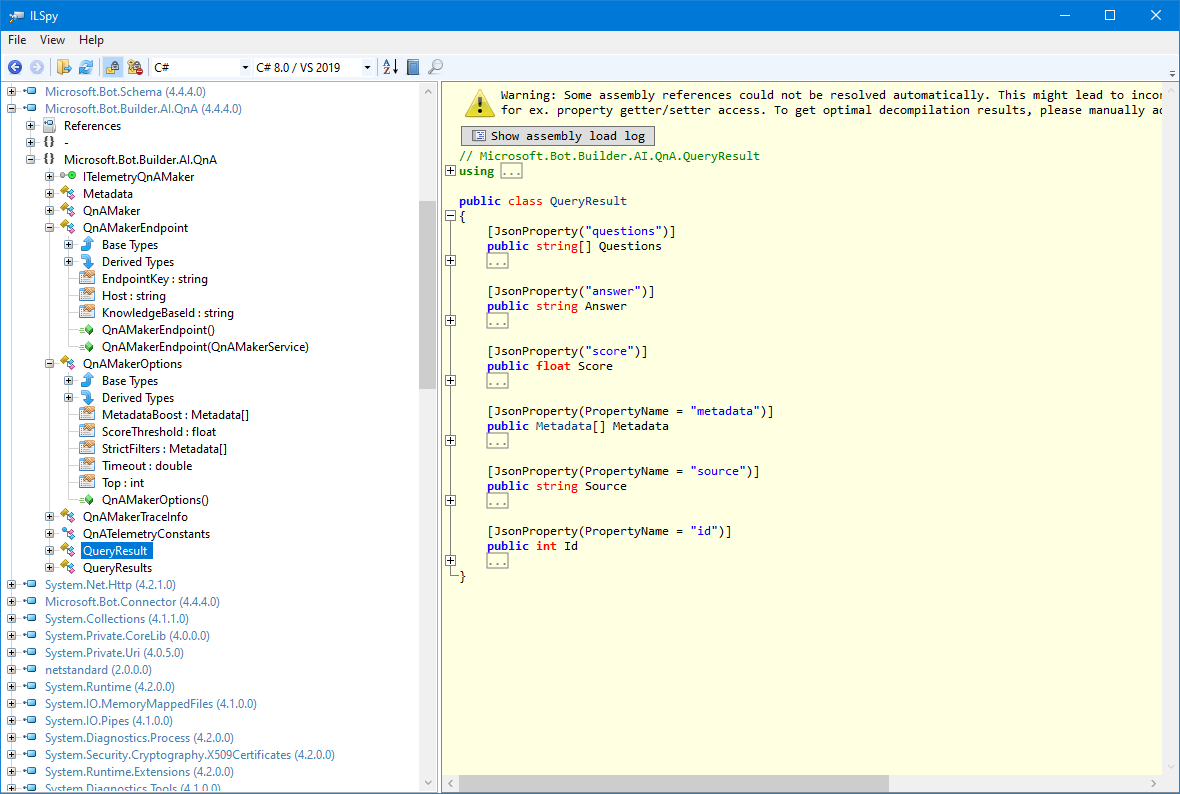
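To make that response shape concrete, here is a minimal JavaScript sketch that pulls the prompt texts out of a parsed response. The function name `getFollowUpPrompts` is hypothetical, and the sample object is an invented, trimmed response for illustration only; it assumes the field names `answers`, `context`, `prompts` and `displayText` seen above.

```javascript
// Hypothetical helper: return the follow-up prompt texts of the top answer in a
// parsed generateAnswer response, or [] when there is no answer, context or prompt.
function getFollowUpPrompts(response) {
  const top = response && Array.isArray(response.answers) ? response.answers[0] : undefined;
  const prompts = top && top.context && Array.isArray(top.context.prompts)
    ? top.context.prompts
    : [];
  return prompts.map((prompt) => prompt.displayText);
}

// Invented, trimmed response used only to illustrate the shape.
const sample = {
  answers: [{
    answer: 'Which product are you asking about?',
    context: {
      isContextOnly: false,
      prompts: [
        { displayOrder: 0, qnaId: 2, displayText: 'Product A' },
        { displayOrder: 1, qnaId: 3, displayText: 'Product B' }
      ]
    }
  }]
};

console.log(getFollowUpPrompts(sample)); // [ 'Product A', 'Product B' ]
```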

However, the QnA Bot template references `Microsoft.Bot.Builder.AI.QnA.dll` (4.4.4.0) to handle the QnA query. Decompiling this DLL in ILSpy shows that it does not read the `context` of the JSON response at all, which means this version of `Microsoft.Bot.Builder.AI.QnA.dll` does not support follow-up prompts by design.

Since this DLL is published by Microsoft, we cannot modify it directly. My solution is therefore to query the QnA Maker service once more to extract the follow-up prompts and, if there are any, add each prompt as a CardAction so that it is displayed.
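Independent of either SDK, the reply this workaround produces can be sketched as a plain Bot Framework activity object: the answer text plus `suggestedActions.actions` holding one `imBack` card action per prompt. The function name `buildReplyWithPrompts` is hypothetical, and the sketch assumes the prompt texts have already been extracted.

```javascript
// Hypothetical helper: build a message activity (plain object following the
// Bot Framework activity schema) that shows each prompt as an imBack button.
function buildReplyWithPrompts(answerText, promptTexts) {
  const reply = { type: 'message', text: answerText };
  if (promptTexts.length > 0) {
    reply.suggestedActions = {
      // imBack posts the button's value back to the bot as the user's next message.
      actions: promptTexts.map((text) => ({ type: 'imBack', title: text, value: text }))
    };
  }
  return reply;
}

console.log(JSON.stringify(buildReplyWithPrompts('Which product?', ['Product A'])));
```

Because `imBack` sends the prompt text as the user's next message, clicking a button simply asks QnA Maker that question.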

Implementation (C#)

Let's edit `Bots\QnABot.cs` and add the following namespaces, which we will use to support follow-up prompts:

```csharp
using Newtonsoft.Json;
using System.Collections.Generic;
using System.Text;
```

Add the following classes to match the shape of the JSON response:

class FollowUpCheckResult { [JsonProperty("answers")] public FollowUpCheckQnAAnswer[] Answers { get; set; } } class FollowUpCheckQnAAnswer { [JsonProperty("context")] public FollowUpCheckContext Context { get; set; } } class FollowUpCheckContext { [JsonProperty("prompts")] public FollowUpCheckPrompt[] Prompts { get; set; } } class FollowUpCheckPrompt { [JsonProperty("displayText")] public string DisplayText { get; set; } }After the

qnaMaker.GetAnswersAsyncsucceeds and there is valid answer, perform an additional HTTP query to check the follow-up prompts:// The actual call to the QnA Maker service. var response = await qnaMaker.GetAnswersAsync(turnContext); if (response != null && response.Length > 0) { // create http client to perform qna query var followUpCheckHttpClient = new HttpClient(); // add QnAAuthKey to Authorization header followUpCheckHttpClient.DefaultRequestHeaders.Add("Authorization", _configuration["QnAAuthKey"]); // construct the qna query url var url = $"{GetHostname()}/knowledgebases/{_configuration["QnAKnowledgebaseId"]}/generateAnswer"; // post query var checkFollowUpJsonResponse = await followUpCheckHttpClient.PostAsync(url, new StringContent("{\"question\":\"" + turnContext.Activity.Text + "\"}", Encoding.UTF8, "application/json")).Result.Content.ReadAsStringAsync(); // parse result var followUpCheckResult = JsonConvert.DeserializeObject<FollowUpCheckResult>(checkFollowUpJsonResponse); // initialize reply message containing the default answer var reply = MessageFactory.Text(response[0].Answer); if (followUpCheckResult.Answers.Length > 0 && followUpCheckResult.Answers[0].Context.Prompts.Length > 0) { // if follow-up check contains valid answer and at least one prompt, add prompt text to SuggestedActions using CardAction one by one reply.SuggestedActions = new SuggestedActions(); reply.SuggestedActions.Actions = new List<CardAction>(); for (int i = 0; i < followUpCheckResult.Answers[0].Context.Prompts.Length; i++) { var promptText = followUpCheckResult.Answers[0].Context.Prompts[i].DisplayText; reply.SuggestedActions.Actions.Add(new CardAction() { Title = promptText, Type = ActionTypes.ImBack, Value = promptText }); } } await turnContext.SendActivityAsync(reply, cancellationToken); } else { await turnContext.SendActivityAsync(MessageFactory.Text("No QnA Maker answers were found."), cancellationToken); }Test it in

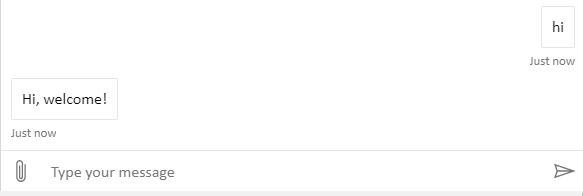

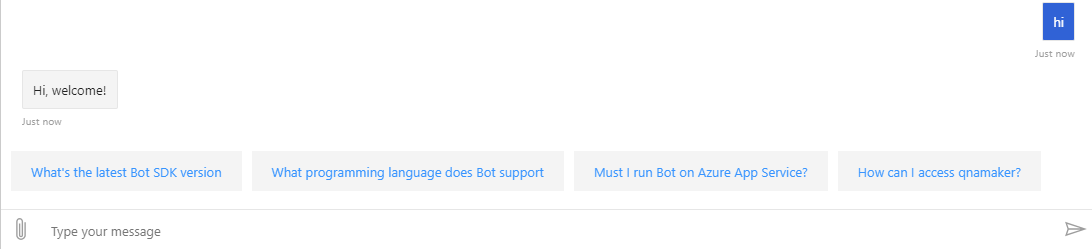

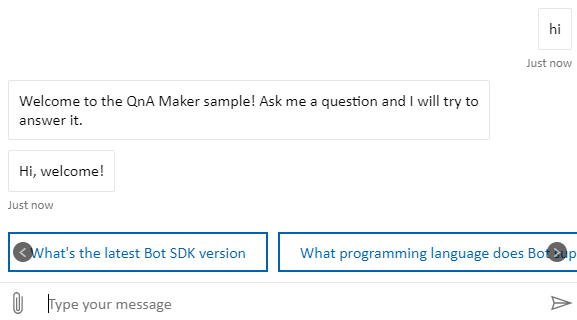

Bot Framework Emulator, now it displays the follow-up prompts as expected:

Publish it to Azure App Service and test it in Web Chat:

Implementation (NodeJS)

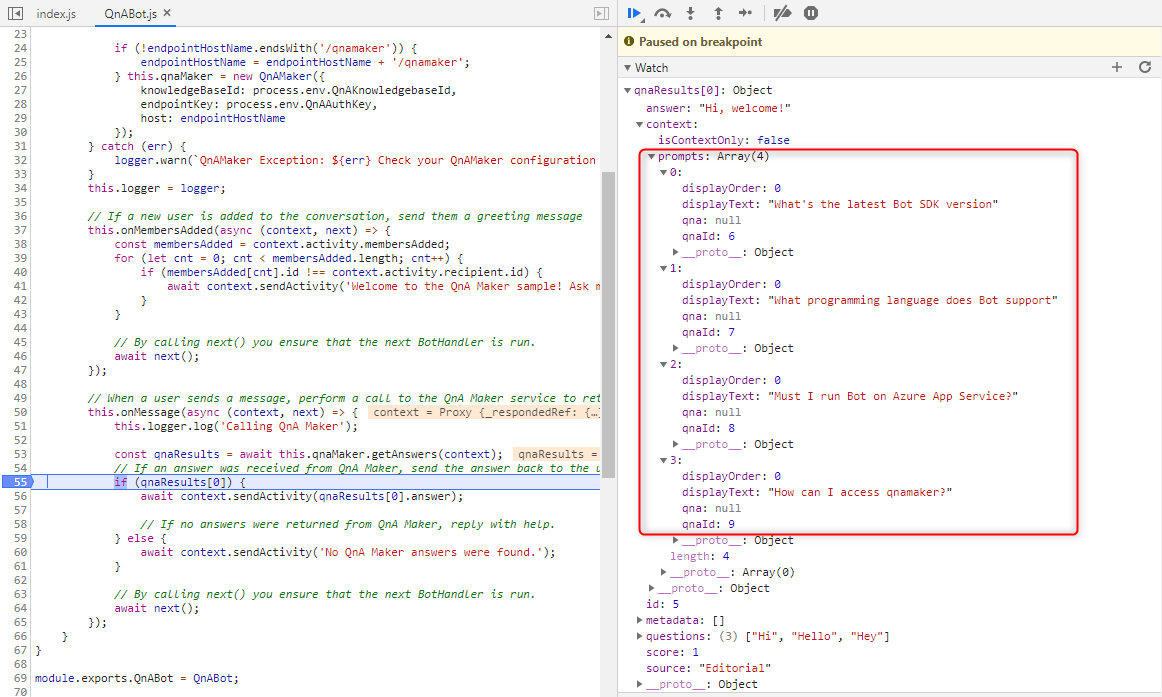

In NodeJS, implementing follow-up prompts is much easier than in C#, because `qnaResults[0]` exposes the full JSON of the answer, including the `context.prompts` we are looking for.

Therefore, we don't need to send an additional HTTP request to the QnA Maker service; we only need to use `MessageFactory` to reconstruct the reply message.

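Import `MessageFactory` from `botbuilder`:

```javascript
const { ActivityHandler, MessageFactory } = require('botbuilder');
```

Reconstruct the reply message in `onMessage` as below:

```javascript
const answer = qnaResults[0];
// context (and context.prompts) can be absent, so fall back to an empty list.
const prompts = (answer.context && answer.context.prompts) || [];
const reply = prompts.length > 0
    ? MessageFactory.suggestedActions(prompts.map((prompt) => prompt.displayText), answer.answer)
    : MessageFactory.text(answer.answer);
await context.sendActivity(reply);
```

Test it in Bot Framework Emulator and deploy it to Azure App Service: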

Caveats

- This can only be used as a workaround until Bot Framework officially supports QnA follow-up prompts. I don't have a timeline for when Bot Framework will have built-in support for follow-up prompts; I will update this article once it is supported.
- The C# implementation makes one duplicate HTTP request to the QnA Maker service.
- This implementation does not support the Context-only option of follow-up prompts, but you can modify it to support that option if you need the feature.
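One possible starting point for Context-only support is to tell QnA Maker which answer the user is following up on, so that context-only QnA pairs can match. The sketch below builds such a request body in JavaScript. It assumes the multi-turn request fields `context.previousQnAId` and `context.previousUserQuery` (verify the exact names against the QnA Maker generateAnswer documentation), and the function name `buildFollowUpQuery` and the `previousTurn` object are hypothetical; your bot would have to store the previous answer's id and question in conversation state.

```javascript
// Hypothetical helper: build a generateAnswer body that carries multi-turn context.
// ASSUMPTION: the service accepts context.previousQnAId / context.previousUserQuery.
function buildFollowUpQuery(question, previousTurn) {
  const body = { question };
  // Only attach context when we know which answer the user is following up on.
  if (previousTurn && typeof previousTurn.qnaId === 'number') {
    body.context = {
      previousQnAId: previousTurn.qnaId,
      previousUserQuery: previousTurn.userQuery
    };
  }
  return JSON.stringify(body);
}

console.log(buildFollowUpQuery('Product A', { qnaId: 1, userQuery: 'Which product?' }));
```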