I was recently developing a text file parser based on the FileReader API in browsers. To keep the heavy parsing job from blocking the UI thread, running the parser in a Web Worker is the best choice. The worker parses the text file into lines and passes each line to the main thread. When it has to pass hundreds of thousands, or even millions, of lines, transfer speed and memory usage become real problems.

Most browsers implement the structured clone algorithm, which lets you pass complex types such as File, Blob, ArrayBuffer and JSON objects into and out of a Web Worker. However, postMessage() still makes a copy of this data. If you pass a large 100MB file, there is a noticeable overhead in moving that file between the worker and the main thread.

To solve this problem, postMessage() also supports passing Transferable Objects. A Transferable Object is moved from one context (the worker thread or the main thread) to the other without additional memory consumption, and the transfer is very fast because no copy is made. Even so, I still saw a notable performance issue when a Web Worker had to pass a massive number of Transferable Objects.

Transfer using structured clone

First, let's see how a Web Worker transfers 500MB of data using the structured clone algorithm, which postMessage() uses by default.

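```javascript
// Worker: allocate 500MB and post it; postMessage() copies it via structured clone
var data = new Uint8Array(500 * 1024 * 1024);
self.postMessage(data);
```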

Test results:

| Browser | Time Taken | Final Memory Usage |
|---|---|---|
| Chrome | 149ms | 1042MB |
| Edge | 455ms | 1048MB |
| Firefox | 380ms | 1079MB |

Memory usage in each browser rose to about 1GB once the transfer completed: the data object was copied before being delivered to the main thread, so the data can be accessed from both contexts.
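The copy can be observed without a worker. The global structuredClone() function runs the same structured clone algorithm that postMessage() uses and is available in modern browsers and Node.js 17+. This minimal sketch uses a 1KB buffer instead of 500MB; cloneCopy is a hypothetical helper name.

```javascript
// Minimal sketch: structuredClone() applies the same algorithm as postMessage(),
// so it shows that cloning leaves the source intact and produces a separate copy.
// Assumes a runtime with global structuredClone (modern browsers, Node.js 17+).
function cloneCopy(byteLength) {
  const source = new Uint8Array(byteLength);
  source[0] = 42;

  const copy = structuredClone(source); // no transfer list: the bytes are copied

  copy[0] = 7; // writing to the copy does not affect the source
  return { source, copy };
}

const result = cloneCopy(1024);
console.log(result.source.length, result.copy.length);    // 1024 1024
console.log(result.source[0], result.copy[0]);            // 42 7
console.log(result.source.buffer === result.copy.buffer); // false
```

Both views stay usable, which is why memory roughly doubles in the measurements above.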

Transfer using Transferable Objects

Now let's pass the same 500MB buffer as a Transferable Object.

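```javascript
// Worker: the second argument (transfer list) moves the underlying ArrayBuffer instead of copying it
var data = new Uint8Array(500 * 1024 * 1024);
self.postMessage(data, [data.buffer]);
```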

Test results:

| Browser | Time Taken | Final Memory Usage |
|---|---|---|
| Chrome | 1ms | 531MB |
| Edge | 0ms | 537MB |
| Firefox | 0ms | 549MB |

Memory usage in each browser stayed at around 500MB because the data object was moved from the worker context to the main context rather than copied. If you read data.length in the worker after the transfer completes, you get 0. Since no copy was made, the transfer was nearly instantaneous.
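structuredClone() also accepts a transfer list, which mirrors the second argument of postMessage(). A minimal sketch, assuming a runtime with structuredClone and its transfer option (modern browsers, Node.js 17+); transferMove is a hypothetical helper name:

```javascript
// Minimal sketch: listing the buffer in `transfer` moves it instead of copying it.
// Assumes global structuredClone with the { transfer } option (modern browsers, Node.js 17+).
function transferMove(byteLength) {
  const source = new Uint8Array(byteLength);
  source[0] = 42;

  // Mirrors self.postMessage(source, [source.buffer])
  const moved = structuredClone(source, { transfer: [source.buffer] });

  return { source, moved };
}

const { source, moved } = transferMove(1024);
console.log(moved.length, moved[0]);   // 1024 42
console.log(source.length);            // 0: the original view is now detached
console.log(source.buffer.byteLength); // 0
```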

Performance issue with massive numbers of Transferable Objects

Based on these results, Transferable Objects are a wise choice for worker communication when you need to transfer only one (or a small number of) large objects and no longer need to access them in the original context.

However, the text parser I am developing needs to parse the text file into lines in a Web Worker and then pass the lines to the main context. When the number of lines is very large, performance degrades noticeably.

Consider the following test method. transferByLine accepts two arguments: the number of lines to test, and whether to use Transferable Objects.

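```javascript
// Worker: build `line` Uint8Arrays of 100 bytes each and post them in one message.
// `transferable` selects structured clone (false) or Transferable Objects (true).
function transferByLine(line, transferable) {
  var arr = [];
  var bufferArr = []; // transfer list: one ArrayBuffer per line

  for (var i = 0; i < line; i++) {
    arr[i] = new Uint8Array(100);
    if (transferable) {
      bufferArr.push(arr[i].buffer);
    }
  }

  console.log('Successfully created the array. The array has ' + line + ' items, each item size is 100 bytes');
  console.log('Start transferring...');

  // Times the synchronous postMessage() call, where the data is serialized or transferred
  var startTime = new Date().getTime();

  if (!transferable) {
    self.postMessage(arr);            // structured clone: every Uint8Array is copied
  } else {
    self.postMessage(arr, bufferArr); // transfer: every ArrayBuffer is detached from the worker
  }

  var timeTaken = new Date().getTime() - startTime;
  console.log('Transfer completed in ' + timeTaken + 'ms.');
}
```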
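To experiment outside a worker, the test can be approximated with structuredClone(), which performs the same serialize and transfer steps as postMessage() but without a second thread, so absolute timings will not match the browser results in this post. A rough sketch, assuming a runtime with global structuredClone and performance.now() (modern browsers, Node.js 17+); timeByLine is a hypothetical name.

```javascript
// Hypothetical worker-free analogue of transferByLine, named timeByLine here.
// structuredClone() performs the same serialize/transfer steps as postMessage(),
// but without a second thread, so timings only indicate trends, not browser numbers.
function timeByLine(line, transferable) {
  const arr = [];
  const bufferArr = []; // transfer list: one ArrayBuffer per line
  for (let i = 0; i < line; i++) {
    arr[i] = new Uint8Array(100);
    if (transferable) bufferArr.push(arr[i].buffer);
  }

  const start = performance.now();
  const received = transferable
    ? structuredClone(arr, { transfer: bufferArr })
    : structuredClone(arr);
  const ms = performance.now() - start;

  // After a transfer, every source view in this context is detached (length 0)
  const detached = arr.every((view) => view.length === 0);
  return { ms, received, detached };
}

for (const line of [2000, 20000]) {
  const viaClone = timeByLine(line, false);
  const viaTransfer = timeByLine(line, true);
  console.log(line + ' lines: clone ' + viaClone.ms.toFixed(1) +
    'ms, transfer ' + viaTransfer.ms.toFixed(1) + 'ms');
}
```

Results depend heavily on the engine version, so treat any numbers it prints as a local measurement only.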

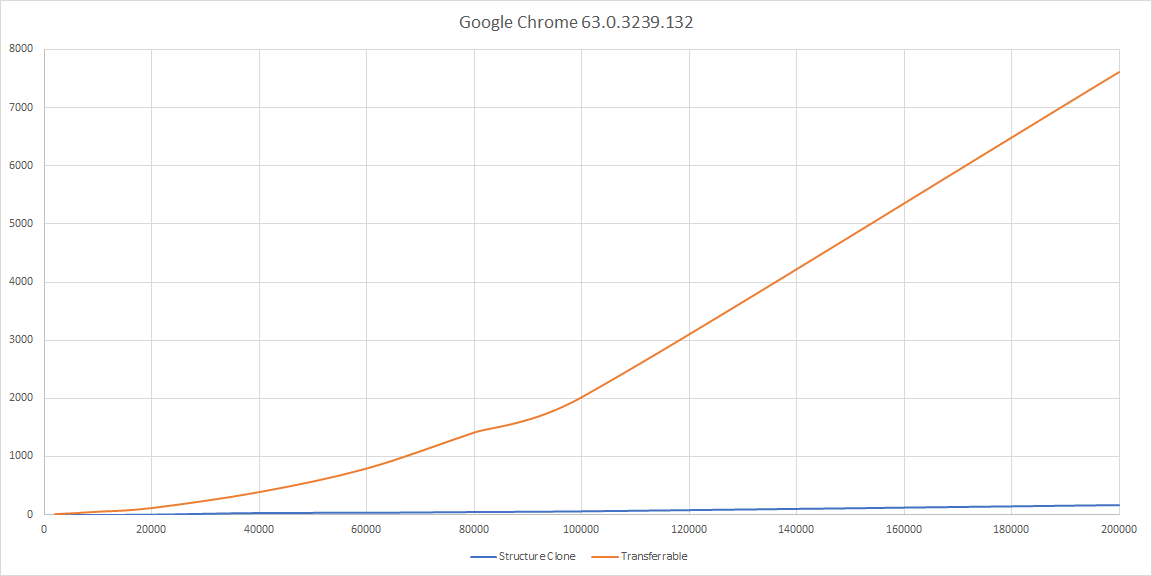

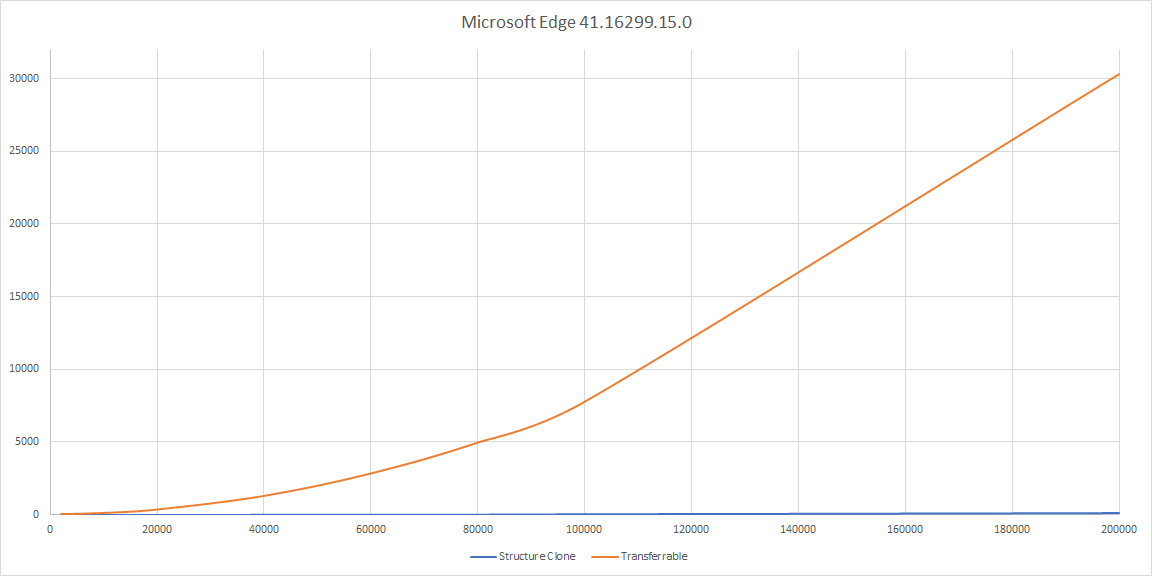

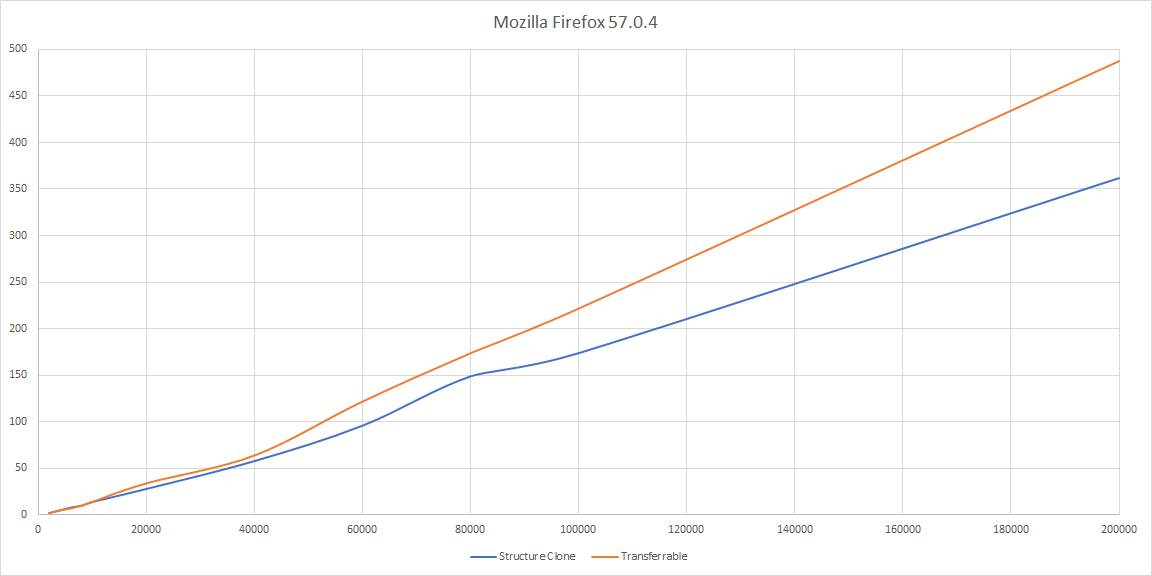

Here are the test results in three browsers (transfer time in ms):

| Browser (method) | 2000 lines | 4000 lines | 6000 lines | 8000 lines | 10000 lines | 20000 lines | 40000 lines | 60000 lines | 80000 lines | 100000 lines | 200000 lines |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Chrome (Clone) | 1 | 2 | 2 | 3 | 5 | 9 | 36 | 40 | 53 | 65 | 170 |
| Chrome (Transferable) | 3 | 12 | 21 | 35 | 44 | 109 | 383 | 789 | 1408 | 2015 | 7609 |
| Edge (Clone) | 1 | 3 | 3 | 3 | 4 | 8 | 15 | 22 | 27 | 46 | 108 |
| Edge (Transferable) | 6 | 19 | 37 | 63 | 88 | 329 | 1266 | 2814 | 4940 | 7759 | 30341 |
| Firefox (Clone) | 2 | 5 | 8 | 10 | 14 | 28 | 58 | 96 | 149 | 174 | 362 |
| Firefox (Transferable) | 2 | 5 | 7 | 10 | 14 | 34 | 64 | 122 | 174 | 222 | 488 |

Plotting these results as charts makes it easier to see how transfer time changes as the number of lines increases.

Based on the test results above, we can see:

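- With Transferable Objects, transfer time in Chrome and Edge grows much faster than linearly: doubling from 100000 to 200000 lines multiplies the time by about 3.8× in Chrome and 3.9× in Edge, which is roughly quadratic growth.

- In Firefox, Transferable Objects follow the same roughly linear trend as structured clone.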
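The growth rate can be put into a number with a two-point estimate of the exponent k, assuming t ≈ c·n^k between two columns of the table: k = log(t2/t1) / log(n2/n1). k ≈ 1 means linear growth and k ≈ 2 quadratic growth. This is a rough estimate from two table columns, not a fit; growthExponent is a hypothetical helper name.

```javascript
// Two-point estimate of the growth exponent k, assuming t ≈ c * n^k between the points.
// k ≈ 1 means linear growth; k ≈ 2 means quadratic growth.
function growthExponent(n1, t1, n2, t2) {
  return Math.log(t2 / t1) / Math.log(n2 / n1);
}

// 100000 -> 200000 lines, times in ms taken from the table above
const rows = {
  'Chrome (Transferable)': [2015, 7609],
  'Edge (Transferable)': [7759, 30341],
  'Firefox (Transferable)': [222, 488],
  'Firefox (Clone)': [174, 362],
};

for (const [label, [t1, t2]] of Object.entries(rows)) {
  console.log(label + ': k ≈ ' + growthExponent(100000, t1, 200000, t2).toFixed(2));
}
// Chrome (Transferable): k ≈ 1.92
// Edge (Transferable): k ≈ 1.97
// Firefox (Transferable): k ≈ 1.14
// Firefox (Clone): k ≈ 1.06
```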

My guess is that, when there are a massive number of Transferable Objects, Chrome and Edge spend a lot of time processing the second argument of postMessage() (the array of ArrayBuffers) and matching it against the objects in the first argument (the data). Apparently, Firefox has optimized this step.

Summary

When a Web Worker needs to pass a large amount of data and that data is held in a few variables, we can safely use Transferable Objects. If the data is spread across a large array of many objects, structured clone is much faster (in Chrome and Edge). If we choose structured clone, we may need to manually release any references to the source data so that the browser's garbage collector can reclaim the memory.
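One way to keep line data in a few variables, not measured in the tests above, is to pack all lines into a single byte buffer plus an offsets array, so the transfer list has two entries no matter how many lines there are. The sketch below assumes UTF-8 lines encoded with TextEncoder; packLines and readLine are hypothetical helper names, and structuredClone stands in for postMessage (in a worker, the call would be self.postMessage(packed, [packed.bytes.buffer, packed.offsets.buffer])).

```javascript
// Hypothetical helpers: pack many lines into one transferable buffer plus offsets.
// Assumes UTF-8 text and a runtime with TextEncoder/TextDecoder and structuredClone.
function packLines(lines) {
  const encoder = new TextEncoder();
  const encoded = lines.map((line) => encoder.encode(line));
  const total = encoded.reduce((sum, lineBytes) => sum + lineBytes.length, 0);

  const bytes = new Uint8Array(total);               // all line bytes, back to back
  const offsets = new Uint32Array(lines.length + 1); // line i is bytes[offsets[i]..offsets[i + 1])
  let pos = 0;
  encoded.forEach((lineBytes, i) => {
    offsets[i] = pos;
    bytes.set(lineBytes, pos);
    pos += lineBytes.length;
  });
  offsets[lines.length] = pos;
  return { bytes, offsets };
}

// Receiving side: decode line i on demand
function readLine(packed, i) {
  const { bytes, offsets } = packed;
  return new TextDecoder().decode(bytes.subarray(offsets[i], offsets[i + 1]));
}

// Only two ArrayBuffers are transferred, however many lines there are
const packed = packLines(['first line', 'second line', 'third line']);
const received = structuredClone(packed, {
  transfer: [packed.bytes.buffer, packed.offsets.buffer],
});
console.log(readLine(received, 1)); // second line
console.log(packed.bytes.length);   // 0: detached in the sending context
```

This trades many small objects for a decode step on the receiving side; whether it beats structured clone for a given file and browser would need to be measured.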